Following the recent launch of the Catalogue of Bias on the website of the Centre for Evidence-Based Medicine, Jeff Aronson explores the origins and uses of the word “bias” in the first of three blogs.

The term “bias”, as used in evidence-based medicine, has been defined in different ways. My aim in these blogs is to explore various aspects of bias, in order to develop a definition that encompasses all the entries in the Catalogue of Bias. In this first blog, I trace the origins of the word.

Etymology and usages

The word “bias” goes back to an Indo-European root that doesn’t look at all related—SKER. In its basic form, this root, one of whose primary meanings is to cut, gives rise to a wealth of English words with connotations of cutting, such as shear, shears, and sheer, score, scar, scabbard, scarp and escarpment, scrabble, scrub and shrub, scurf, shard, sharp, short and skirt, skirmish, scrimmage, and scrum.

Variants on the related root KER give us words such as cortex and decorticate, curt and cutlass. The Greek adjective κάρσιος, karsios, meant [cut] crosswise. Adding the prefix ἐπι, giving a sense of motion, gave ἐπικάρσιος, epikarsios, which also meant crosswise, but often in the more restricted sense of running at right angles, describing, for example, a striped garment, the planks of a ship, or a grid of streets. It was also used to describe coastlines, as opposed to paths running inwards perpendicular to the coast. And from ἐπικάρσιος, with consonantal shift and elision, and via French biais, comes bias.

Bias in bowling

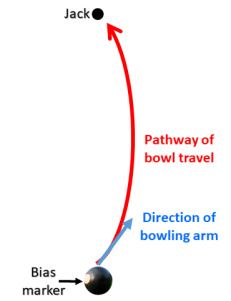

A well-known example of bias is found in the two varieties of the British sport of bowls. Crown Green Bowls is played outdoors on a grass or artificial lawn with a raised centre (the crown), which is up to 12 inches (30 cm) higher than the periphery of the green, and from which the lawn slopes down unevenly on all sides. In Flat Green Bowls, which can be played outdoors or indoors, there is no crown. In both variants, a ditch surrounds the green, which is usually rectangular or square. The size of the green is not specified, but outdoor greens are on average 33–44 yards (30–40 m) in length and of variable width, typically up to 66 yards (60 m). The area is divided in imagination into rectangular strips called rinks, each about 5–6 yards wide, usually running parallel to the sides, in which pairs or teams of players compete. First, a jack, a miniature bowl, is rolled from one end of the rink to the other, typically at least 21 yards (19 m) away. The object of the game is then to roll your bowl along the ground so that it stops as close to the jack as possible. But here’s the catch. Both the jack and the bowls are asymmetrically shaped, and this asymmetry, known as bias, distorts the direction in which they travel—in a curve, not a straight line (see Figure 1). All the other modern meanings of “bias” come from this meaning.

Figure 1. Bias in bowling

When it entered English in the middle of the 16th century, “bias” meant an oblique or slanting line (a bias line), like the diagonal of a quadrilateral or the hypotenuse of a triangle, or a wedge-shaped piece of cloth cut into a fabric. It then came to be applied to the run of a bowl and hence “the construction or form of the bowl imparting an oblique motion, the oblique line in which it runs, and the kind of impetus given to cause it to run obliquely” (Oxford English Dictionary).

Shakespeare used the word eleven times in eight plays. For example, in The Taming of the Shrew (iv:6:25) he used it in its original literal meaning: “Well, forward, forward. Thus the bowl should run, And not unluckily against the bias.” And again, in Troilus and Cressida (iv:6:8), “Blow, villain, till thy spherèd bias cheek Outswell the colic of puffed Aquilon”. However, in most cases he used it figuratively, as in Richard II (iii:4:4): “’Twill make me think the world is full of rubs, And that my fortune runs against the bias.” And in King John (ii:1:575): “Commodity, the bias of the world.” In this usage, the word means “an inclination, tendency, or propensity, and hence a predisposition, predilection, or prejudice”, the sense in which the word is most commonly used nowadays in general parlance.

It wasn’t until about the start of the 20th century that the idea of bias was introduced into statistics, defined as “a systematic distortion of an expected statistical result due to a factor not allowed for in its derivation; also, a tendency to produce such distortion” (OED). The term “distortion” here is particularly apt, since it comes from the Latin verb torquere, meaning to twist or turn to one side, just as a bowl does on a bowling green.

Other technical meanings have also emerged, for example in telegraphy and electronics. More recently terms such as “bias attack”, “bias crime”, and “bias offence” have emerged to describe various forms of so-called hate crime.

In my next blog, I shall consider catalogues of biases and previous definitions. In my final blog, I shall propose a definition of “bias” that is relevant to the entries in the Catalogue of Bias.

Jeffrey Aronson is a clinical pharmacologist, working in the Centre for Evidence-Based Medicine in Oxford’s Nuffield Department of Primary Care Health Sciences. He is also president emeritus of the British Pharmacological Society.

Competing interests: None declared.