Following the recent launch of the Catalogue of Bias on the website of the Centre for Evidence-Based Medicine, Jeff Aronson continues, in the second of three blogs, his investigation into the word “bias”, surveying catalogues and previous definitions.

In 1979 David Sackett, crediting the help of a clinical epidemiology graduate student, JoAnne Chiavetta, made what appears to have been the first attempt to classify the types of biases that can occur in observational studies, which he called “analytic research”. “To date,” he wrote, “we have catalogued 35 biases that arise in sampling and measurement.” In an appendix to the paper, he divided the sources of biases into these two categories and added five others, in which he included a further 21 varieties, making 56 in all. He gave references to 33 of them, implying that he was describing the other 23 for the first time, giving them original names.

Later catalogues are listed in Table 1.

Table 1. Some catalogues of biases

| Source | Description |
| --- | --- |
| Delgado-Rodríguez & Llorca (2004) | 69 biases classified as belonging to one of four major groups (information bias, selection bias, confounding, and execution of an intervention) and several subgroups |
| Choi & Pak (2005) | 48 biases detected in questionnaires, with examples of each, categorized according to the ways in which individual questions are designed, the ways in which the questionnaire as a whole is designed, and how the questionnaire is administered |
| Chavalarias & Ioannidis (2010) | 235 examples of biases that were mentioned in PubMed in at least three articles each; they listed the 40 most commonly cited biases and constructed a network showing how frequently they were discussed and the links among them |
| Porta M (editor) A Dictionary of Epidemiology (6th edition, 2014) | 53 biases defined in separate dictionary entries; four other biases mentioned in the text |
| Oxford’s Centre for Evidence Based Medicine (CEBM) Catalogue of bias (2018) | See text |

In 2017, taking its cue from one of Sackett’s suggestions, the Centre for Evidence-Based Medicine (CEBM) in Oxford launched its online Catalogue of Bias, in which individual biases are defined and described, with practical examples, information about the effects they are likely to have on the results of clinical studies, and methods for preventing or analysing them. As I write, the catalogue has over 50 entries either posted online or in preparation.

Previous definitions

Good definitions give clear explanations of concepts and their importance and may give insights into their potential impact. They allow us to agree on what we are talking about, avoiding ambiguity. They can highlight cultural differences so that misunderstandings can be avoided. And studies based on uniform definitions can be readily compared in systematic reviews.

Elsewhere I have described in detail my approach to crafting definitions. Briefly, it involves studying the etymology and usages of the definiendum (the word or term to be defined), considering definitions that others have proposed, and taking into consideration how the processes involved actually operate. I then list the characteristics that seem to be the most important and use them to create a definition.

In my previous blog about bias, I discussed its etymology and its usages since the 16th century. Here I discuss definitions that others have proposed in statistics and epidemiology.

I have searched widely for definitions of “bias” in books and journal articles. In many cases authors offer no definition at all. Some of those that do define “bias” use Sackett’s definition. In Table 2 I have listed other definitions that I have found. This is not a complete list, but other definitions contain all the elements included in the definitions listed in the Table.

Note that this is a heterogeneous group of definitions, in that some define the count noun “bias”, where each bias is an individual example of the phenomenon, while others define the non-count noun, which is the phenomenon itself. Failing to distinguish these two uses can lead to ambiguity. I shall concentrate on defining the count noun.

Table 2. Published definitions of “bias” in statistics, epidemiology, and sociology

| Source | Definition |
| --- | --- |
| Nisbet (1926) | In an experiment with a finite number of chance results, if one of the factors, on which the result of the experiment is dependent, is related physically, in a special way, to some of the alternatives, then these alternatives are biassed [sic] |
| Murphy EA. The Logic of Medicine (1976) | A process at any stage of inference tending to produce results that depart systematically from the true values |
| Sackett D (1979) [Based on Murphy 1976] | Any process at any stage of inference which tends to produce results or conclusions that differ systematically from the truth |
| Schlesselman JJ. Case-Control Studies: Design, Conduct, and Analysis (1982) | Any systematic error in the design, conduct or analysis of a study that results in a mistaken estimate of an exposure’s effect on the risk of a disease |
| Steineck & Ahlbom (1992) | The sum of confounding, misclassification, misrepresentation, and analysis deviation |
| Hammersley & Gomm (1997) | [One of several potential forms of] systematic and culpable error … that the researcher should have been able to recognize and minimize [they also refer to other interpretations, such as: any systematic deviation from validity, or to some deformation of research practice that produces such deviation] |
| Elliott P, Wakefield JC. In: Spatial Epidemiology (2000) | Deviation of study results (in either direction) from some “true” value that the study was designed to estimate |
| Delgado-Rodríguez & Llorca (2004) | Lack of internal validity or incorrect assessment of the association between an exposure and an effect in the target population in which the statistic estimated has an expectation that does not equal the true value |
| Choi & Pak (2005) | A deviation of results or inferences from the truth, or processes leading to such a deviation |
| Sica (2006) | A form of systematic error that can affect scientific investigations and distort the measurement process |
| Paradis (2008) | A systematic error, which undermines a study’s ability to approximate the truth |
| Cochrane Methods Group | A systematic error, or deviation from the truth, in results or inferences [continues: Biases can operate in either direction …] |
| CONSORT | Systematic distortion of the estimated intervention effect away from the “truth”, caused by inadequacies in the design, conduct, or analysis of a trial |
| A Dictionary of Epidemiology (6th edition, 2014) | Systematic deviation of results or inferences from the truth. … An error in the conception and design of a study—or in the collection, analysis, interpretation, reporting, publication, or review of data—leading to results or conclusions that are systematically (as opposed to randomly) different from the truth |
| Schneider D, Lilienfeld DE. Lilienfeld’s Foundations of Epidemiology. 4th edition. (2015) | A systematic error that can creep into a study design and lead the epidemiologist to inaccurate findings |

Common features in definitions

From the frequencies of their appearance in these 15 definitions, the following features emerge as being important.

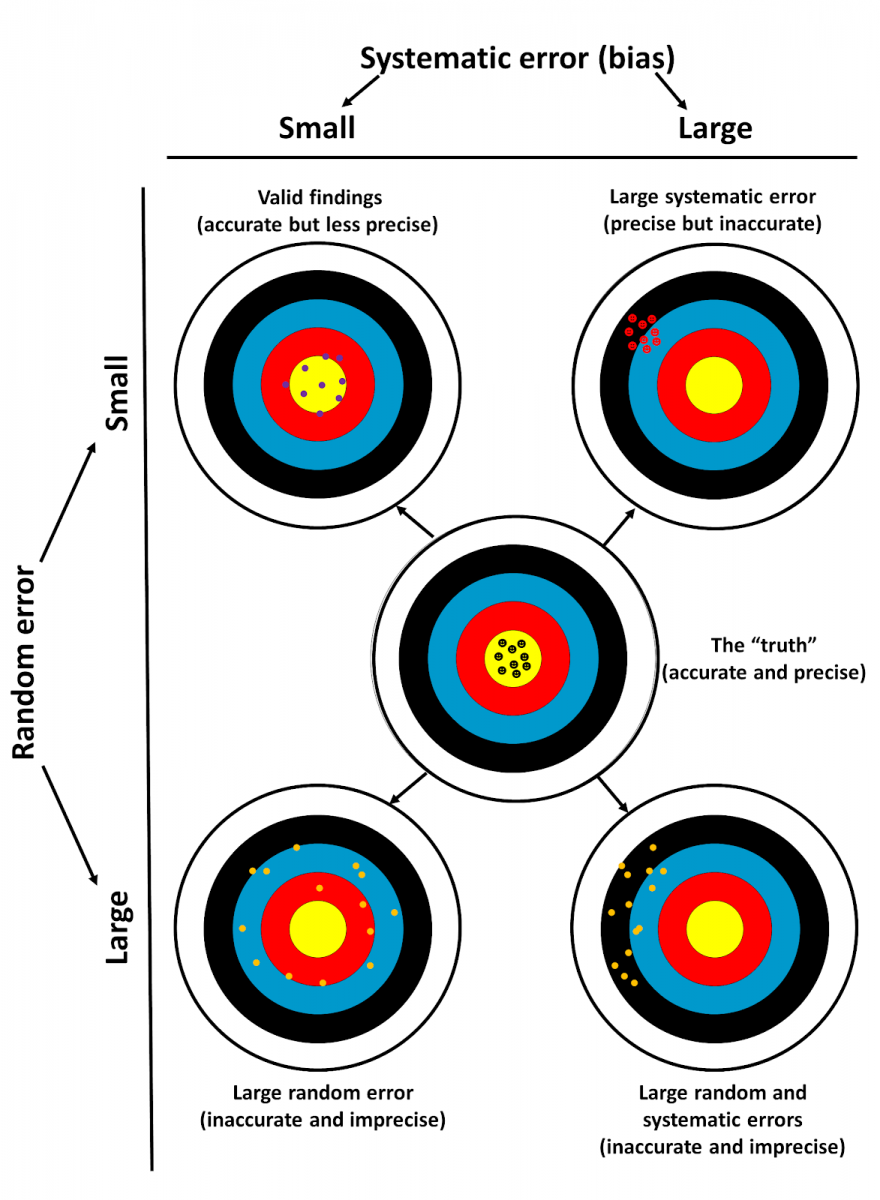

Systematicity: Ten of the definitions make it clear that biases arise from systematic rather than random processes. Figure 1 shows how apparent outcomes vary depending on the relative amounts of systematic and random errors, dichotomously categorized.

Truth: Nine of the definitions refer to the true outcome, or truth, as that from which bias causes deviation. However, I believe that it is preferable to refer to the probability of an outcome, or the outcome that would be found in the absence of bias, rather than implying that there is some definitive truth to be discovered. Reflecting this, four definitions include the word “estimate[d]”, even though three of them also include the words “true” or “truth”.

Error: Eight definitions refer to “error” or related words, such as “incorrect” and “inaccurate”.

Deviation or distortion: These words feature in seven definitions. They refer to the extent to which the apparent result of a study differs from the result that would be expected were there to be no bias. In relation to this, “results” appears in seven definitions and “expectation” in two.

The elements affected: Six definitions refer to the conception, design, and conduct of a study, and the collection, analysis, interpretation, and representation of the data, all of which may be affected by biases. I take “representation” here to mean the figurative and tabular display of data, to which one could, therefore, add discussion of its relevance, which might also be subject to bias.

Direction: Two definitions highlight the fact that a distortion can occur in either direction, underestimating or overestimating the effect that would emerge in the absence of a bias; I consider it important to stress this.
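As a hedged aside, the statistical sense of systematic versus random error can be written compactly. The notation is an assumption introduced here and is not taken from any of the definitions above: $\theta$ stands for the quantity a study sets out to estimate (the value that would be found in the absence of bias), $\hat\theta$ for the study's estimate, and $\operatorname{E}$ for the expectation over hypothetical repetitions of the study.

```latex
\begin{align*}
\hat\theta - \theta
  &= \underbrace{\bigl(\operatorname{E}[\hat\theta] - \theta\bigr)}_{\text{systematic error (bias)}}
   + \underbrace{\bigl(\hat\theta - \operatorname{E}[\hat\theta]\bigr)}_{\text{random error}}, \\
\operatorname{E}\bigl[(\hat\theta - \theta)^2\bigr]
  &= \bigl(\operatorname{E}[\hat\theta] - \theta\bigr)^2 + \operatorname{Var}(\hat\theta).
\end{align*}
```

The first term can be positive or negative, which corresponds to the point that a bias can distort a result in either direction. Only this first term is "systematic" in the sense used by most of the definitions listed.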

Figure 1. How the results of a study may deviate from the “truth” (middle target)

- Top left: a well-designed study should yield results that are precise although they will probably be less accurate than the “truth”; this is commonly called good internal validity

- Top right: bias, due to large systematic error, gives results that are precise but inaccurate

- Bottom left: large random error gives results that are neither accurate nor precise

- Bottom right: a combination of large systematic and random errors
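The four combinations of small and large systematic and random error can also be illustrated numerically. The following is a minimal sketch, not a model of any real study: the function name `simulate_study`, the target value of 10.0, and the error sizes are all hypothetical choices made for illustration. Each simulated result is the target plus a fixed systematic offset plus normally distributed random noise; the mean deviation from the target reflects the systematic error, and the spread reflects the random error.

```python
import random
import statistics


def simulate_study(true_value, systematic_error, random_sd, n=10_000, seed=0):
    """Simulate n hypothetical results and summarise how they miss the target.

    Returns (mean deviation from the target, standard deviation of results).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    results = [
        true_value + systematic_error + rng.gauss(0.0, random_sd)
        for _ in range(n)
    ]
    mean_deviation = statistics.fmean(results) - true_value
    spread = statistics.stdev(results)
    return mean_deviation, spread


TRUE_VALUE = 10.0  # hypothetical target, chosen only for illustration

# (systematic offset, random standard deviation) for each combination
scenarios = {
    "small systematic, small random": (0.0, 0.5),
    "large systematic, small random": (2.0, 0.5),
    "small systematic, large random": (0.0, 3.0),
    "large systematic, large random": (2.0, 3.0),
}

summary = {
    label: simulate_study(TRUE_VALUE, systematic, random_sd)
    for label, (systematic, random_sd) in scenarios.items()
}

for label, (mean_deviation, spread) in summary.items():
    print(f"{label:32s} mean deviation = {mean_deviation:+.2f}, spread = {spread:.2f}")
```

In this sketch, increasing the number of simulated results shrinks the uncertainty in the mean but leaves the systematic offset untouched, which is one way of seeing why bias cannot be removed simply by collecting more data.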

This information will be useful when, in the third and final blog in this series, I explore an operational approach to bias and suggest definitions that are relevant to the entries in the CEBM’s Catalogue of Bias.

Jeffrey Aronson is a clinical pharmacologist and Fellow of the Centre for Evidence-Based Medicine in Oxford’s Nuffield Department of Primary Care Health Sciences. He is also president emeritus of the British Pharmacological Society.

Competing interests: None declared.